Outline

E2E test functions rather than unit test functions.

When developing a test program for a backend server built with NestJS, I recommend adopting the E2E test paradigm instead of the unit test paradigm. With the SDK library generated by @nestia/sdk, E2E test functions become much easier to write, safer, and more efficient for production than traditional unit test functions.

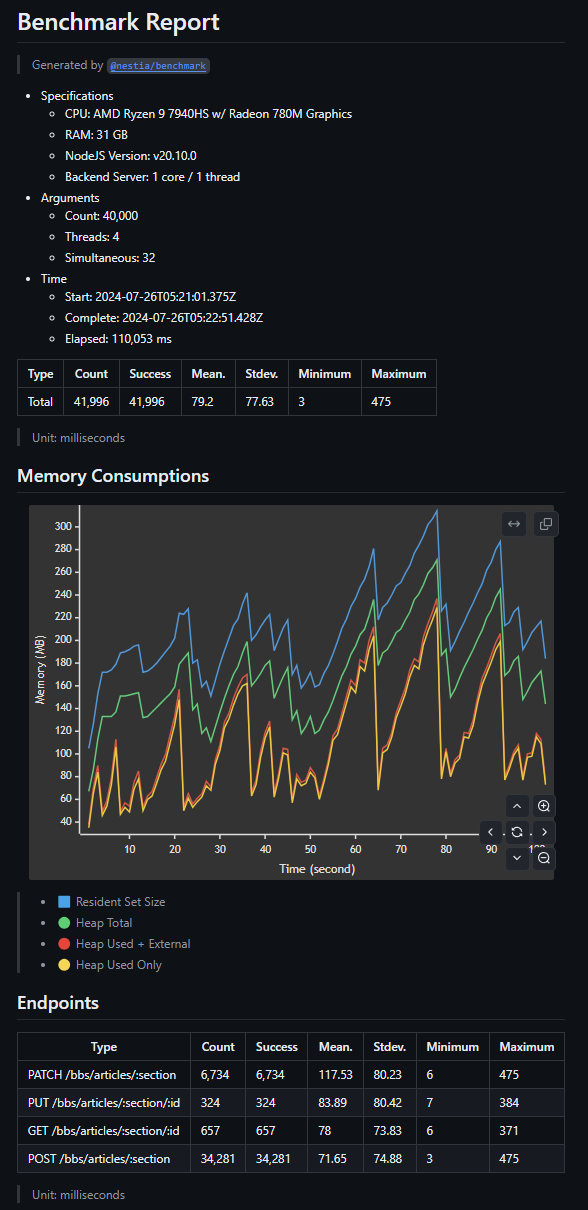

Furthermore, if you develop test functions utilizing the SDK library, you can easily reuse those e2e test functions as performance benchmark functions. Just by utilizing the @nestia/e2e and @nestia/benchmark libraries, you can measure the performance of your NestJS backend server through the SDK-based e2e test functions.

Test functions utilizing the SDK library can be reused in the performance benchmark program

Efficient for Production

A new era: the age of the E2E testing paradigm has come.

In the past, backend developers wrote test programs following the unit test paradigm, because traditional E2E test functions could not take any advantage of a compiled language's type safety. As you can see from the example code below, E2E test functions had to call fetch() with hard-coded string literals.

On the other hand, unit tests could take advantage of the compiled language's type safety by importing the related modules. Therefore, it was more efficient to develop test functions following the unit test paradigm, and this is why the unit test paradigm was loved in the past era.

```typescript
import { TestValidator } from "@nestia/e2e";
import typia from "typia";

import { IBbsArticle } from "@samchon/bbs-api/lib/structures/bbs/IBbsArticle";

export const test_api_bbs_article_create = async (
  host: string,
): Promise<void> => {
  // In the traditional age, E2E test functions could not take advantage
  // of TypeScript's type safety. Instead, they had to call `fetch()`
  // with hard-coded string literals.
  const response: Response = await fetch(`${host}/bbs/articles`, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      writer: "someone",
      password: "1234",
      title: "title",
      body: "content",
      format: "md",
      files: [],
    } satisfies IBbsArticle.ICreate),
  });
  const article: IBbsArticle = await response.json();
  typia.assert(article);

  const read: IBbsArticle = await (async () => {
    const response: Response = await fetch(
      `${host}/bbs/articles/${article.id}`,
    );
    // Use a distinct name: redeclaring `article` in this scope would shadow
    // the outer one and make `article.id` above a use-before-declaration error.
    const data: IBbsArticle = await response.json();
    return typia.assert(data);
  })();
  TestValidator.equals("written")(article)(read);
};
```

However, in the new era, e2e test functions can also take advantage of type safety. Just import the SDK library generated by @nestia/sdk, and call its API functions with TypeScript type hints. Now that both the e2e test paradigm and the unit test paradigm can take advantage of type safety, we have to consider which strategy is more suitable for the production environment.
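To see why an SDK restores type safety, here is a minimal hand-written sketch of the kind of typed wrapper an SDK function provides. It is not the code @nestia/sdk actually generates; `IConnection`, `IArticleCreate`, `IArticle`, and `createArticle` are hypothetical, simplified names. The point is that the HTTP method, the path, and the request/response types live in one function, so a test calling it gets compile-time checks instead of string literals.

```typescript
// Hypothetical, simplified types; not the real @samchon/bbs-api structures.
interface IConnection {
  host: string;
}

interface IArticleCreate {
  writer: string;
  title: string;
  body: string;
}

interface IArticle extends IArticleCreate {
  id: string;
}

// Hypothetical typed wrapper: method, path, and types are fixed in one place,
// so callers cannot misspell the URL or send a wrongly shaped body.
async function createArticle(
  connection: IConnection,
  input: IArticleCreate,
): Promise<IArticle> {
  const response = await fetch(`${connection.host}/bbs/articles`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(input),
  });
  if (!response.ok) throw new Error(`HTTP ${response.status}`);
  // The cast is not checked at runtime, which is why tests still call typia.assert().
  return (await response.json()) as IArticle;
}

// A test then reads like an ordinary, type-checked function call:
// const article = await createArticle({ host }, { writer: "someone", title: "t", body: "b" });
```

A generated SDK applies the same idea to every endpoint, so renaming a field or changing a route breaks the test at compile time rather than at runtime.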

In this article, I recommend adopting the e2e test paradigm for the following reasons:

- suitable for CDD (Contract Driven Development)

- easy to develop and maintain

- much safer than unit testing due to its coverage

- can be used for the performance benchmark

- can guide client developers as an example code

Look at the example code of a new era e2e test function below, and compare it with a traditional unit test function that calls the provider directly. As you can see, the e2e test function actually tests the backend server's behavior by calling its real API endpoints. In contrast, a unit test can't test the backend server's actual behavior; it only validates the provider's behavior. This is why the new era's e2e test function is safer than a unit test function.

Also, such new era e2e test functions can be provided to client or frontend developers as example code, showing them how to call the backend server's API endpoints through the SDK library. In this way, e2e test functions serve as well-structured documentation for client developers.

```typescript
import { TestValidator } from "@nestia/e2e";
import typia from "typia";

import BbsApi from "@samchon/bbs-api";
import { IBbsArticle } from "@samchon/bbs-api/lib/structures/bbs/IBbsArticle";

export const test_api_bbs_article_create = async (
  connection: BbsApi.IConnection,
): Promise<void> => {
  // Unlike a unit test calling the provider directly, this call goes through
  // the SDK and hits the real `POST /bbs/articles` endpoint of the server.
  const article: IBbsArticle = await BbsApi.functional.bbs.articles.create(
    connection,
    {
      writer: "someone",
      password: "1234",
      title: "title",
      body: "content",
      format: "md",
      files: [],
    },
  );
  typia.assert(article);

  // Read it back through the real `GET /bbs/articles/:id` endpoint, so the
  // server's actual behavior is validated end to end. This is the reason
  // why I've adopted the e2e test paradigm.
  const read: IBbsArticle = await BbsApi.functional.bbs.articles.at(
    connection,
    article.id,
  );
  typia.assert(read);
  TestValidator.equals("written")(article)(read);
};
```

Finally, the new era's e2e test functions can be used for performance benchmarking without any extra effort. As e2e test functions directly call the backend server's API endpoints, a performance benchmark program for the backend server can be easily developed by reusing them.
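As a rough illustration of this reuse, the sketch below times repeated calls to one test function. `measure` and `IMeasurement` are hypothetical names for a single-process helper; this is not how @nestia/benchmark works internally (it spreads requests over worker threads). It only shows that an e2e test function is already a self-contained unit of load.

```typescript
// Hypothetical result shape for one benchmarked test function.
interface IMeasurement {
  count: number;
  failures: number;
  meanMs: number;
  maxMs: number;
}

// Hypothetical helper: reuse any e2e test function as a benchmark unit.
async function measure<P extends unknown[]>(
  test: (...args: P) => Promise<void>,
  args: () => P, // fresh arguments per call, like `parameters` in a test program
  count: number,
): Promise<IMeasurement> {
  let failures = 0;
  let totalMs = 0;
  let maxMs = 0;
  for (let i = 0; i < count; ++i) {
    const started = Date.now(); // millisecond resolution is enough for a sketch
    try {
      await test(...args());
    } catch {
      ++failures; // a failed assertion counts as a failed request
    }
    const elapsed = Date.now() - started;
    totalMs += elapsed;
    maxMs = Math.max(maxMs, elapsed);
  }
  return { count, failures, meanMs: count === 0 ? 0 : totalMs / count, maxMs };
}
```

For example, `await measure(test_api_bbs_article_create, () => [{ ...connection }], 100)` would run the test function from above 100 times in sequence against a running server.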

Those are the reasons why I recommend adopting the new era's e2e test paradigm instead of the traditional unit test paradigm, and why I insist that e2e test functions are efficient for production. From now on, let's see how to compose e2e test functions for a NestJS backend server.

You can try out a benchmark program that utilizes e2e test functions.

Test Program Development

```typescript
import { DynamicExecutor } from "@nestia/e2e";

import api from "@ORGANIZATION/PROJECT-api";

import { MyBackend } from "../src/MyBackend";
import { MyConfiguration } from "../src/MyConfiguration";
import { MyGlobal } from "../src/MyGlobal";
import { ArgumentParser } from "./helpers/ArgumentParser";

interface IOptions {
  include?: string[];
  exclude?: string[];
}

const getOptions = () =>
  ArgumentParser.parse<IOptions>(async (command, _prompt, action) => {
    // command.option("--mode <string>", "target mode");
    // command.option("--reset <true|false>", "reset local DB or not");
    command.option("--include <string...>", "include feature files");
    command.option("--exclude <string...>", "exclude feature files");
    return action(async (options) => {
      // if (typeof options.reset === "string")
      //   options.reset = options.reset === "true";
      // options.mode ??= await prompt.select("mode")("Select mode")([
      //   "LOCAL",
      //   "DEV",
      //   "REAL",
      // ]);
      // options.reset ??= await prompt.boolean("reset")("Reset local DB");
      return options as IOptions;
    });
  });

async function main(): Promise<void> {
  // CONFIGURATIONS
  const options: IOptions = await getOptions();
  MyGlobal.testing = true;

  // BACKEND SERVER
  const backend: MyBackend = new MyBackend();
  await backend.open();

  //----
  // CLIENT CONNECTOR
  //----
  // DO TEST
  const connection: api.IConnection = {
    host: `http://127.0.0.1:${MyConfiguration.API_PORT()}`,
  };
  const report: DynamicExecutor.IReport = await DynamicExecutor.validate({
    prefix: "test",
    parameters: () => [{ ...connection }],
    // run functions matching any --include and none of the --exclude values
    filter: (func) =>
      (!options.include?.length ||
        (options.include ?? []).some((str) => func.includes(str))) &&
      (!options.exclude?.length ||
        (options.exclude ?? []).every((str) => !func.includes(str))),
  })(__dirname + "/features");

  await backend.close();

  const failures: DynamicExecutor.IReport.IExecution[] =
    report.executions.filter((exec) => exec.error !== null);
  if (failures.length === 0) {
    console.log("Success");
    console.log("Elapsed time", report.time.toLocaleString(), `ms`);
  } else {
    for (const f of failures) console.log(f.error);
    process.exit(-1);
  }

  console.log(
    [
      `All: #${report.executions.length}`,
      `Success: #${report.executions.length - failures.length}`,
      `Failed: #${failures.length}`,
    ].join("\n"),
  );
}

main().catch((exp) => {
  console.log(exp);
  process.exit(-1);
});
```

Just make test functions utilizing the SDK library, and export them with test_ prefixed names. Then, once you compose the main test program as above, all of the test functions are automatically mounted and executed whenever you run it.
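Conceptually, the mounting works by collecting every exported function whose name starts with the prefix and running it with the given parameters. The sketch below is a simplified, hypothetical re-implementation for illustration only, not @nestia/e2e's actual code: `runTests`, `matches`, `IExecution`, and `IFilterOptions` are made-up names, and it takes already-loaded module objects instead of scanning the features directory (in a real program those objects would come from `require()` or `import()` of each file). `matches` applies the same --include/--exclude rule as the `filter` callback in the main program.

```typescript
type TestFunction<P extends unknown[]> = (...args: P) => Promise<void>;

// Hypothetical per-function result, loosely mirroring a test report entry.
interface IExecution {
  name: string;
  error: Error | null;
  time: number; // elapsed milliseconds
}

interface IFilterOptions {
  include?: string[];
  exclude?: string[];
}

// Keep a name if it contains any "include" value and no "exclude" value.
function matches(name: string, options: IFilterOptions): boolean {
  const include = options.include ?? [];
  const exclude = options.exclude ?? [];
  return (
    (include.length === 0 || include.some((str) => name.includes(str))) &&
    (exclude.length === 0 || exclude.every((str) => !name.includes(str)))
  );
}

// Run every exported function whose name starts with `prefix`, one by one.
async function runTests<P extends unknown[]>(
  modules: Record<string, unknown>[],
  prefix: string,
  parameters: () => P,
  options: IFilterOptions = {},
): Promise<IExecution[]> {
  const executions: IExecution[] = [];
  for (const mod of modules)
    for (const [name, value] of Object.entries(mod)) {
      if (!name.startsWith(prefix) || typeof value !== "function") continue;
      if (!matches(name, options)) continue;
      const started = Date.now();
      let error: Error | null = null;
      try {
        await (value as TestFunction<P>)(...parameters());
      } catch (exp) {
        // a thrown assertion marks this test as failed, others keep running
        error = exp instanceof Error ? exp : new Error(String(exp));
      }
      executions.push({ name, error, time: Date.now() - started });
    }
  return executions;
}
```

Because failures are captured per function instead of aborting the loop, one broken test still lets the rest of the suite report.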

If you want to try the test program right away, visit the playground website below.

💻 https://stackblitz.com/~/github.com/samchon/nestia-start

- test_api_bbs_article_at: 149 ms
- test_api_bbs_article_create: 30 ms
- test_api_bbs_article_index_search: 1,312 ms
- test_api_bbs_article_index_sort: 1,110 ms
- test_api_bbs_article_update: 28 ms

Performance Benchmark

```typescript
import { DynamicBenchmarker } from "@nestia/benchmark";
import cliProgress from "cli-progress";
import fs from "fs";
import os from "os";
import { IPointer } from "tstl";

import { MyBackend } from "../../src/MyBackend";
import { MyConfiguration } from "../../src/MyConfiguration";
import { MyGlobal } from "../../src/MyGlobal";
import { ArgumentParser } from "../helpers/ArgumentParser";

interface IOptions {
  include?: string[];
  exclude?: string[];
  count: number;
  threads: number;
  simultaneous: number;
}

const getOptions = () =>
  ArgumentParser.parse<IOptions>(async (command, prompt, action) => {
    // command.option("--mode <string>", "target mode");
    // command.option("--reset <true|false>", "reset local DB or not");
    command.option("--include <string...>", "include feature files");
    command.option("--exclude <string...>", "exclude feature files");
    command.option("--count <number>", "number of requests to make");
    command.option("--threads <number>", "number of threads to use");
    command.option(
      "--simultaneous <number>",
      "number of simultaneous requests to make",
    );
    return action(async (options) => {
      // if (typeof options.reset === "string")
      //   options.reset = options.reset === "true";
      // options.mode ??= await prompt.select("mode")("Select mode")([
      //   "LOCAL",
      //   "DEV",
      //   "REAL",
      // ]);
      // options.reset ??= await prompt.boolean("reset")("Reset local DB");
      // ask interactively for any numeric option not given on the command line
      options.count = Number(
        options.count ??
          (await prompt.number("count")("Number of requests to make")),
      );
      options.threads = Number(
        options.threads ??
          (await prompt.number("threads")("Number of threads to use")),
      );
      options.simultaneous = Number(
        options.simultaneous ??
          (await prompt.number("simultaneous")(
            "Number of simultaneous requests to make",
          )),
      );
      return options as IOptions;
    });
  });

const main = async (): Promise<void> => {
  // CONFIGURATIONS
  const options: IOptions = await getOptions();
  MyGlobal.testing = true;

  // BACKEND SERVER
  const backend: MyBackend = new MyBackend();
  await backend.open();

  // DO BENCHMARK
  const prev: IPointer<number> = { value: 0 };
  const bar: cliProgress.SingleBar = new cliProgress.SingleBar(
    {},
    cliProgress.Presets.shades_classic,
  );
  bar.start(options.count, 0);

  const report: DynamicBenchmarker.IReport = await DynamicBenchmarker.master({
    servant: `${__dirname}/servant.js`,
    count: options.count,
    threads: options.threads,
    simultaneous: options.simultaneous,
    filter: (func) =>
      (!options.include?.length ||
        (options.include ?? []).some((str) => func.includes(str))) &&
      (!options.exclude?.length ||
        (options.exclude ?? []).every((str) => !func.includes(str))),
    progress: (value: number) => {
      // redraw the progress bar only every 100 completed requests
      if (value >= 100 + prev.value) {
        bar.update(value);
        prev.value = value;
      }
    },
    stdio: "ignore",
  });
  bar.stop();

  // DOCUMENTATION
  try {
    await fs.promises.mkdir(`${MyConfiguration.ROOT}/docs/benchmarks`, {
      recursive: true,
    });
  } catch {}
  // one report per CPU model, with path separators stripped from the file name
  await fs.promises.writeFile(
    `${MyConfiguration.ROOT}/docs/benchmarks/${os
      .cpus()[0]
      .model.trim()
      .split("\\")
      .join("")
      .split("/")
      .join("")}.md`,
    DynamicBenchmarker.markdown(report),
    "utf8",
  );

  // CLOSE
  await backend.close();
};

main().catch((exp) => {
  console.error(exp);
  process.exit(-1);
});
```

You can reuse test functions for the performance benchmark program.

Just compose the benchmark's main program like above, together with its servant program (the servant.js file that the main program points to) targeting the test functions' directory, and the benchmark program will utilize those test functions to measure the backend server's performance. The benchmark program creates multiple worker threads and lets them make requests to the backend server simultaneously through the test functions.
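The `simultaneous` option caps how many requests are in flight at once. Below is a hypothetical single-thread sketch of that scheduling idea; `runSimultaneously` is a made-up name, and the real @nestia/benchmark additionally spreads the work across `threads` worker threads, which this sketch does not attempt. A fixed number of async lanes keep pulling the next request until `count` requests have completed, reporting progress the way the `progress` callback above receives it.

```typescript
// Hypothetical scheduler: at most `simultaneous` calls of `task` run at once.
async function runSimultaneously(
  task: () => Promise<void>,
  count: number,
  simultaneous: number,
  onProgress?: (completed: number) => void,
): Promise<{ completed: number; failures: number }> {
  let started = 0;
  let completed = 0;
  let failures = 0;

  // Each lane loops until all `count` requests have been started. JavaScript
  // runs this single-threaded, so the check and increment cannot interleave.
  const lane = async (): Promise<void> => {
    while (started < count) {
      ++started;
      try {
        await task();
      } catch {
        ++failures;
      }
      ++completed;
      onProgress?.(completed);
    }
  };

  const lanes = Math.max(1, Math.min(simultaneous, count));
  await Promise.all(Array.from({ length: lanes }, lane));
  return { completed, failures };
}
```

Here `task` would wrap one test function call, for example `() => test_api_bbs_article_create({ ...connection })`.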

If you want to try the benchmark program right away, visit the playground website below.

💻 https://stackblitz.com/~/github.com/samchon/nestia-start

```
? Number of requests to make 1024
? Number of threads to use 4
? Number of simultaneous requests to make 32
████████████████████████████████████████ 100% | ETA: 0s | 3654/1024
```