Outline

Benchmark your backend server with e2e test functions.

If you’ve developed e2e test functions using the SDK library generated by @nestia/sdk, you can reuse them in the benchmark program provided by @nestia/benchmark. The benchmark program runs these e2e test functions in parallel, picking them at random, to measure the performance of your backend server.
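To make the idea concrete, here is a minimal, hypothetical TypeScript sketch of running randomly chosen test functions while keeping a bounded number of calls in flight, and recording each call's latency. It is not how @nestia/benchmark is implemented; `runRandomly`, `TestFunction`, and `IMeasurement` are illustrative names introduced here.

```typescript
// Hypothetical sketch, NOT the @nestia/benchmark implementation:
// run `count` randomly chosen test functions with at most `simultaneous`
// of them in flight at once, and record how long each call took.

type TestFunction = () => Promise<void>;

interface IMeasurement {
  name: string; // which test function was picked
  elapsedMs: number; // wall-clock time of the call
  success: boolean; // false if the test function threw
}

async function runRandomly(
  functions: Record<string, TestFunction>,
  count: number,
  simultaneous: number,
): Promise<IMeasurement[]> {
  const names: string[] = Object.keys(functions);
  if (names.length === 0) throw new Error("no test functions to run");

  const results: IMeasurement[] = [];
  let issued: number = 0;

  // Each worker keeps taking jobs until `count` calls have been issued.
  // The check and the increment run with no `await` in between, so
  // two workers can never claim the same slot.
  const worker = async (): Promise<void> => {
    while (issued < count) {
      ++issued;
      const name: string = names[Math.floor(Math.random() * names.length)];
      const started: number = Date.now();
      let success: boolean = true;
      try {
        await functions[name]();
      } catch {
        success = false;
      }
      results.push({ name, elapsedMs: Date.now() - started, success });
    }
  };

  // Start `simultaneous` workers (at least one) and wait for all of them.
  await Promise.all(
    Array.from({ length: Math.max(1, simultaneous) }, () => worker()),
  );
  return results;
}
```

Each worker stands in for one concurrent client. The real benchmark program additionally distributes the work over multiple servant programs, as described below.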

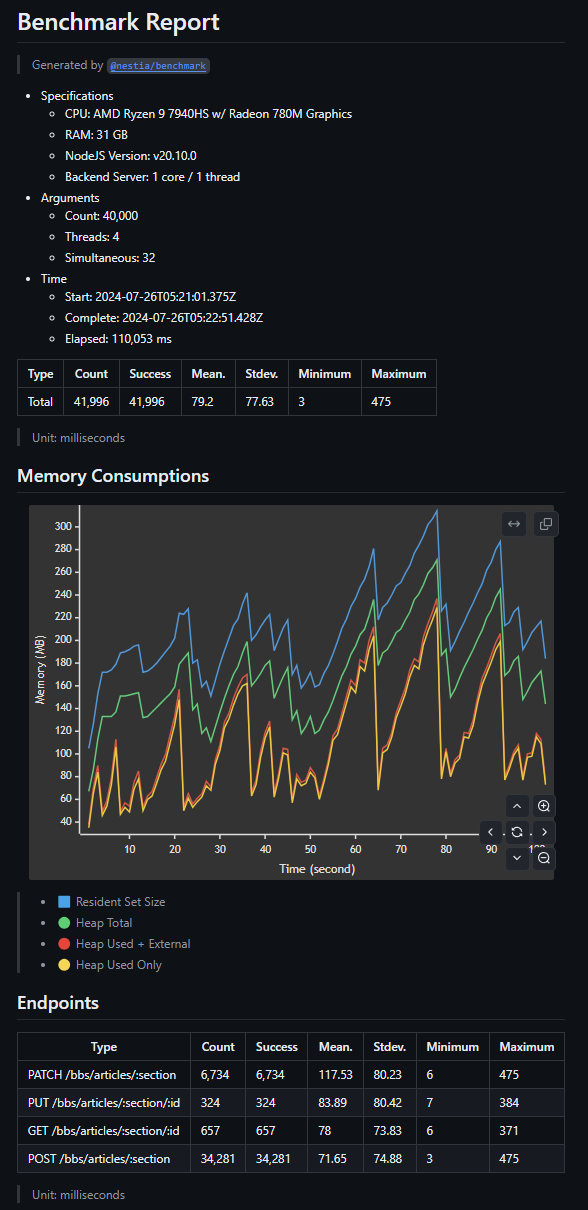

To try the benchmark program with @nestia/sdk e2e test functions before adopting it, visit the playground website below. Here is also an example of the benchmark report generated by the @nestia/benchmark program when executed in that playground.

💻 https://stackblitz.com/~/github.com/samchon/nestia-start

```
? Number of requests to make 1024
? Number of threads to use 4
? Number of simultaneous requests to make 32
████████████████████████████████████████ 100% | ETA: 0s | 3654/1024
```

Main Program

```typescript
import { DynamicBenchmarker } from "@nestia/benchmark";
import cliProgress from "cli-progress";
import fs from "fs";
import os from "os";
import { IPointer } from "tstl";

import { MyBackend } from "../../src/MyBackend";
import { MyConfiguration } from "../../src/MyConfiguration";
import { MyGlobal } from "../../src/MyGlobal";
import { ArgumentParser } from "../helpers/ArgumentParser";

interface IOptions {
  include?: string[];
  exclude?: string[];
  count: number;
  threads: number;
  simultaneous: number;
}

// Read options from the command line, prompting for any that are missing.
const getOptions = () =>
  ArgumentParser.parse<IOptions>(async (command, prompt, action) => {
    // command.option("--mode <string>", "target mode");
    // command.option("--reset <true|false>", "reset local DB or not");
    command.option("--include <string...>", "include feature files");
    command.option("--exclude <string...>", "exclude feature files");
    command.option("--count <number>", "number of requests to make");
    command.option("--threads <number>", "number of threads to use");
    command.option(
      "--simultaneous <number>",
      "number of simultaneous requests to make",
    );
    return action(async (options) => {
      // if (typeof options.reset === "string")
      //   options.reset = options.reset === "true";
      // options.mode ??= await prompt.select("mode")("Select mode")([
      //   "LOCAL",
      //   "DEV",
      //   "REAL",
      // ]);
      // options.reset ??= await prompt.boolean("reset")("Reset local DB");
      options.count = Number(
        options.count ??
          (await prompt.number("count")("Number of requests to make")),
      );
      options.threads = Number(
        options.threads ??
          (await prompt.number("threads")("Number of threads to use")),
      );
      options.simultaneous = Number(
        options.simultaneous ??
          (await prompt.number("simultaneous")(
            "Number of simultaneous requests to make",
          )),
      );
      return options as IOptions;
    });
  });

const main = async (): Promise<void> => {
  // CONFIGURATIONS
  const options: IOptions = await getOptions();
  MyGlobal.testing = true;

  // BACKEND SERVER
  const backend: MyBackend = new MyBackend();
  await backend.open();

  // DO BENCHMARK
  const prev: IPointer<number> = { value: 0 };
  const bar: cliProgress.SingleBar = new cliProgress.SingleBar(
    {},
    cliProgress.Presets.shades_classic,
  );
  bar.start(options.count, 0);

  const report: DynamicBenchmarker.IReport = await DynamicBenchmarker.master({
    // compiled servant program launched by this main program
    servant: `${__dirname}/servant.js`,
    count: options.count,
    threads: options.threads,
    simultaneous: options.simultaneous,
    // keep functions matching any --include and none of --exclude
    filter: (func) =>
      (!options.include?.length ||
        (options.include ?? []).some((str) => func.includes(str))) &&
      (!options.exclude?.length ||
        (options.exclude ?? []).every((str) => !func.includes(str))),
    // redraw the progress bar only after every 100 more completions
    progress: (value: number) => {
      if (value >= 100 + prev.value) {
        bar.update(value);
        prev.value = value;
      }
    },
    stdio: "ignore",
  });
  bar.stop();

  // DOCUMENTATION
  try {
    await fs.promises.mkdir(`${MyConfiguration.ROOT}/docs/benchmarks`, {
      recursive: true,
    });
  } catch {}
  // the report file is named after the CPU model, with "\" and "/" removed
  await fs.promises.writeFile(
    `${MyConfiguration.ROOT}/docs/benchmarks/${os
      .cpus()[0]
      .model.trim()
      .split("\\")
      .join("")
      .split("/")
      .join("")}.md`,
    DynamicBenchmarker.markdown(report),
    "utf8",
  );

  // CLOSE
  await backend.close();
};

main().catch((exp) => {
  console.error(exp);
  process.exit(-1);
});
```

To compose a benchmark program with @nestia/benchmark for your backend application, you need to create two executable TypeScript programs: the main program and the servant program.

The main program is executed by the user (with the `npm run benchmark` command in the playground project) and coordinates the whole benchmark. It launches multiple servant programs in parallel and aggregates their benchmark results. After aggregation, it publishes the benchmark report in Markdown format.
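The aggregation step can be pictured with the hypothetical sketch below: each servant reports the latencies of its successful requests plus a failure count, the main program merges them into one summary, and the summary is rendered as a Markdown table. The result shape, the percentile choice, and the table layout are assumptions for illustration, not the format of `DynamicBenchmarker.IReport` or `DynamicBenchmarker.markdown()`. Only `reportFileName` mirrors the original code: it applies the same file-name cleanup the main program uses on the CPU model string.

```typescript
// Hypothetical result shape; the real DynamicBenchmarker.IReport differs.
interface IServantResult {
  latencies: number[]; // ms, one entry per successful request
  failures: number; // requests whose test function threw
}

interface ISummary {
  requests: number;
  failures: number;
  mean: number;
  p50: number;
  p99: number;
}

// Nearest-rank percentile of an ascending-sorted array (0 if empty).
function percentile(sorted: number[], p: number): number {
  if (sorted.length === 0) return 0;
  const rank: number = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.min(sorted.length, Math.max(1, rank)) - 1];
}

// Merge the results of every servant into a single summary.
function aggregate(results: IServantResult[]): ISummary {
  const latencies: number[] = [];
  let failures: number = 0;
  for (const r of results) {
    for (const x of r.latencies) latencies.push(x);
    failures += r.failures;
  }
  latencies.sort((a, b) => a - b);
  const total: number = latencies.reduce((sum, x) => sum + x, 0);
  return {
    requests: latencies.length + failures,
    failures,
    mean: latencies.length === 0 ? 0 : total / latencies.length,
    p50: percentile(latencies, 50),
    p99: percentile(latencies, 99),
  };
}

// Render the summary as a one-row Markdown table.
function toMarkdown(s: ISummary): string {
  return [
    "| Requests | Failures | Mean (ms) | p50 (ms) | p99 (ms) |",
    "|---:|---:|---:|---:|---:|",
    `| ${s.requests} | ${s.failures} | ${s.mean.toFixed(1)} | ${s.p50} | ${s.p99} |`,
  ].join("\n");
}

// Same cleanup the main program applies to os.cpus()[0].model:
// trim the string and drop "\" and "/" so it is usable as a file name.
function reportFileName(cpuModel: string): string {
  return `${cpuModel.trim().split("\\").join("").split("/").join("")}.md`;
}
```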

The main program launches several servant programs in parallel, and the servants are what actually run the e2e test functions for benchmarking. When composing the servant program, you have to specify the directory where the e2e test functions are located. Likewise, when composing the main program, you have to specify the file location of the servant program (the `servant` property passed to `DynamicBenchmarker.master()`).
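Besides the servant location, the main program narrows which test functions run through the `filter` callback built from the `--include` and `--exclude` options. Pulled out as a standalone function (`makeFilter` and `IFilterOptions` are names introduced here for illustration), the same substring-matching logic looks like this:

```typescript
interface IFilterOptions {
  include?: string[]; // values of --include
  exclude?: string[]; // values of --exclude
}

// Same logic as the `filter` callback passed to DynamicBenchmarker.master():
// a function name passes when it contains at least one --include string
// (or no --include was given) and contains none of the --exclude strings.
function makeFilter(options: IFilterOptions): (func: string) => boolean {
  const include: string[] = options.include ?? [];
  const exclude: string[] = options.exclude ?? [];
  return (func: string): boolean =>
    (include.length === 0 || include.some((str) => func.includes(str))) &&
    exclude.every((str) => !func.includes(str));
}
```

Because matching is a plain substring test, an `--include` value such as `bbs` selects every test function whose name contains that text.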

For more examples of benchmark programs, see the links below:

| Project | Main | Servant | Report |
|---|---|---|---|
| samchon/nestia-start | index.ts | servant.ts | REPORT.md |
| samchon/backend | index.ts | servant.ts | REPORT.md |

Test Functions

```typescript
import { RandomGenerator, TestValidator } from "@nestia/e2e";
import { v4 } from "uuid";

import api from "@ORGANIZATION/PROJECT-api/lib/index";
import { IBbsArticle } from "@ORGANIZATION/PROJECT-api/lib/structures/bbs/IBbsArticle";

export async function test_api_bbs_article_create(
  connection: api.IConnection,
): Promise<void> {
  // STORE A NEW ARTICLE
  const stored: IBbsArticle = await api.functional.bbs.articles.create(
    connection,
    "general",
    {
      writer: RandomGenerator.name(),
      title: RandomGenerator.paragraph(3)(),
      body: RandomGenerator.content(8)()(),
      format: "txt",
      files: [
        {
          name: "logo",
          extension: "png",
          url: "https://somewhere.com/logo.png",
        },
      ],
      password: v4(),
    },
  );

  // READ THE DATA AGAIN
  const read: IBbsArticle = await api.functional.bbs.articles.at(
    connection,
    stored.section,
    stored.id,
  );
  // the article read back must equal the one just stored
  TestValidator.equals("created")(stored)(read);
}
```

Developing e2e test functions is straightforward. Write each e2e test function using the SDK library generated by @nestia/sdk, and export it under a `test_`-prefixed name (if you’ve configured a different prefix in the benchmark program, follow that configuration).

Also, give each function the parameter(s) configured in the benchmark's servant program. The test function above comes from the playground project, whose servant is configured to pass a single connection parameter, so, like every test function there, it takes only one parameter: `connection`.
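The prefix and parameter rules can be pictured with the hypothetical sketch below, which scans a module's exports for `test_`-prefixed functions and calls each one with the same argument list. `collectTests`, `runAll`, and the minimal `IConnection` are illustrative stand-ins, not @nestia/benchmark APIs; the real servant loads the test files from its configured directory and builds the arguments from its own configuration.

```typescript
// Hypothetical stand-in for the SDK's connection type.
interface IConnection {
  host: string;
}

type TestFunction<P extends unknown[]> = (...args: P) => Promise<void>;

// Collect exported functions whose name starts with `prefix`,
// e.g. test_api_bbs_article_create; all other exports are ignored.
function collectTests<P extends unknown[]>(
  exported: Record<string, unknown>,
  prefix: string,
): Map<string, TestFunction<P>> {
  const found = new Map<string, TestFunction<P>>();
  for (const [name, value] of Object.entries(exported))
    if (name.startsWith(prefix) && typeof value === "function")
      found.set(name, value as TestFunction<P>);
  return found;
}

// Call every collected test, one after another, with the argument list
// produced by `parameters` (a single connection in the playground project).
async function runAll<P extends unknown[]>(
  tests: Map<string, TestFunction<P>>,
  parameters: () => P,
): Promise<string[]> {
  const passed: string[] = [];
  for (const [name, test] of tests) {
    await test(...parameters());
    passed.push(name);
  }
  return passed;
}
```

Any export that lacks the prefix, or is not a function, is simply skipped, which is why helper functions can live next to the tests in the same file.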

Once the e2e test functions are written, run the benchmark main program. In the playground project, use the `npm run benchmark` command. The benchmark program then runs these e2e test functions in parallel, picking them at random, and measures the performance of your backend server.

```bash
git clone https://github.com/samchon/nestia-start
cd nestia-start
npm install
npm run build:test
npm run benchmark
```